Does anyone else feel like we are living on a treadmill set to maximum speed right now?

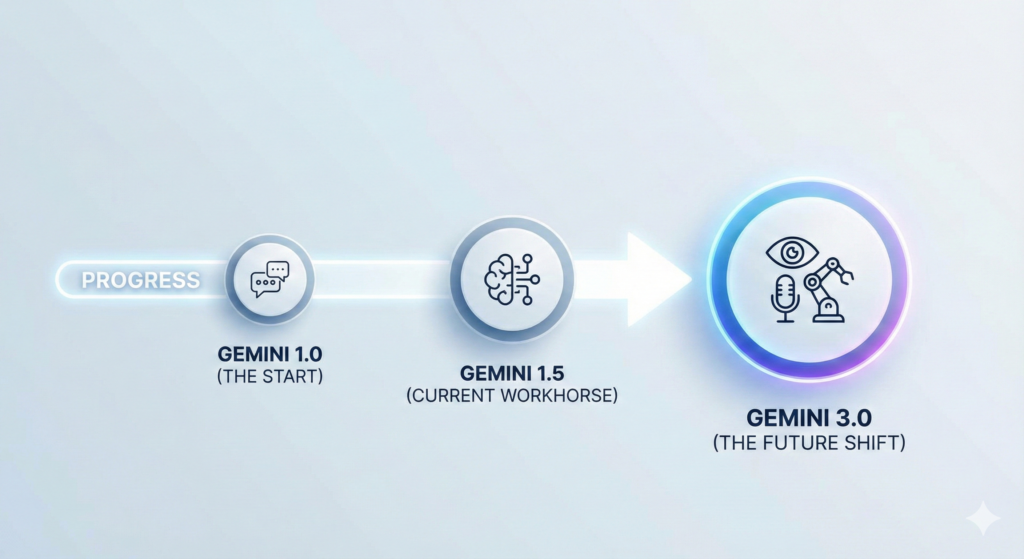

It feels like just yesterday Google announced Gemini 1.0, then suddenly we had 1.5 Pro with its massive context window, and now the rumor mill is already spinning up about Gemini 3.0.

If you’re getting “AI fatigue,” I get it. It’s hard to keep up. But if you’re a casual observer who just wants to know, “Is the next version actually going to be better, or just hype?” then keep reading.

I’ve been digging through the developer forums, leak accounts, and Google’s own cryptic hints to figure out what Gemini 3.0 is actually going to be.

Spoiler: I think this might be the update where AI stops feeling like a cool party trick and starts feeling like an actual, usable assistant.

Here is a human-readable breakdown of what we expect from Google’s next massive AI model.

It’s Not Just About Text Anymore (Seriously)

Right now, even with the best models, we are mostly still just typing in boxes. Sure, you can upload a photo and ask Gemini what it is, and it’s pretty good at that.

But Gemini 3.0 is expected to be “natively multimodal” on a whole new level.

What does that mean in plain English? It means it won’t just look at a static picture. It should be able to understand video and audio in real time.

Imagine pointing your phone camera at a leaky faucet, and instead of just identifying the faucet, Gemini 3.0 watches the water drip, listens to the sound it makes, diagnoses the specific washer problem, and finds the exact YouTube tutorial to fix it, all in ten seconds.

We are moving from an AI that “reads” the internet to an AI that “experiences” the world like we do.

From “Helpful Chatbot” to “Digital Agent”

This is the buzzword you’re going to hear everywhere in the next year: Agents.

Right now, chatbots are passive. You ask a question, they give an answer. The interaction ends there.

The goal for Gemini 3.0 is to make it “agentic.” This means the AI can take your request, break it down into steps, and actually go execute those steps on the web.

- Current AI: “Hey Gemini, find me flights to Tokyo in May under $800.” (It gives you a list of links.)

- Gemini 3.0: “Hey Gemini, book me the best flight to Tokyo in May under $800, use my saved credit card, and put it on my calendar.” (It actually does it and just sends you a confirmation email.)

If Google pulls this off, it turns Gemini from a slightly smarter search engine into a genuine personal assistant.
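For the curious, here is roughly what “break it down into steps and execute them” could look like in code. This is a toy Python sketch, not how Gemini actually works: the tool names (`search_flights`, `book_flight`, `add_to_calendar`), the hard-coded flight data, and the fixed plan are all made up for illustration. A real agent would presumably have the model generate the plan and call real services.

```python
# Toy "agent" loop: plan the steps, then run each step with a tool.
# Every tool here is a hypothetical stand-in, not a real Google API.

def search_flights(state: dict) -> dict:
    # Hard-coded sample flights so the sketch runs offline.
    flights = [{"id": "F1", "price": 950}, {"id": "F2", "price": 760}]
    affordable = [f for f in flights if f["price"] <= state["max_price"]]
    if not affordable:
        raise LookupError("no flight under budget")
    # Pick the cheapest flight that fits the budget.
    return {"flight": min(affordable, key=lambda f: f["price"])}

def book_flight(state: dict) -> dict:
    # Pretend to pay and return a fake confirmation code.
    return {"confirmation": f"CONF-{state['flight']['id']}"}

def add_to_calendar(state: dict) -> dict:
    # Pretend to create a calendar entry for the booked flight.
    event = f"Flight to {state['destination']} ({state['confirmation']})"
    return {"calendar_event": event}

TOOLS = {
    "search_flights": search_flights,
    "book_flight": book_flight,
    "add_to_calendar": add_to_calendar,
}

def plan(request: dict) -> list:
    # Assumption: a real agent would ask the model for this plan.
    # Here it is fixed so the example stays deterministic.
    return ["search_flights", "book_flight", "add_to_calendar"]

def run_agent(request: dict) -> dict:
    state = dict(request)  # copy so the caller's request is not modified
    for step in plan(request):
        # Each tool reads the shared state and returns new facts to add.
        state.update(TOOLS[step](state))
    return state

result = run_agent({"destination": "Tokyo", "month": "May", "max_price": 800})
print(result["calendar_event"])
```

The point of the sketch is the shape of the loop: each step reads what earlier steps produced, which is the difference between a chatbot that answers once and an agent that carries a task through.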

The Big Questions Everyone Is Asking (FAQs)

Look, nobody outside of Google’s deepest labs knows for sure, but based on how these things usually go, here are the answers to the most common questions about the next Gemini.

When is Gemini 3.0 actually coming out?

If I had to bet money? Late 2025 or very early 2026.

Google just pushed Gemini 1.5 Pro and Flash hard at their recent I/O event. They need time to let those models breathe. Building something as complex as 3.0 takes massive computing power and time. Don’t hold your breath for a release this year.

Will Gemini 3.0 be free?

Yes and no.

Google’s strategy is pretty clear now. There will almost certainly be a free version of Gemini 3.0 (maybe a “Flash” or “Nano” version) that replaces the current free tier. It will be fast and capable.

But the version with the real magic (super-smart, video-analyzing, agentic) will almost certainly be locked behind the “Gemini Advanced” paywall, currently around $20/month. The best AI isn’t cheap to run.

How will this compare to GPT-5 or whatever OpenAI is doing?

This is the great tech rivalry of our time. It’s a leapfrog game. One company releases something amazing, and six months later the other one tops it.

Gemini 3.0’s biggest advantage will likely be its integration with the Google ecosystem. If it can flawlessly connect your Gmail, Docs, Calendar, and Maps together, it will be more useful to the average person than a slightly smarter standalone chatbot from a competitor.

It’s easy to get cynical about the endless hype cycle of AI.

But if Gemini 3.0 delivers on the promise of true “agents” that can actually do things for us, rather than just talk at us, it’s going to be a massive shift in how we use computers.

Until then, I’ll keep using the current version to help me write emails when my brain is tired.